Episode 1: NLU and Error Handling

Flashback – Scene 4: Question Answering and Following TED

As I mentioned in the first post, the goal with the Q&A flow was that the user could ask a question about Covid-19 and we would display the answer returned by Mila’s model API. There have been multiple versions of this portion of the application, from very basic to quite complex, and it was integrated in more and more places in the dialogue.

In the first version of the question-answering flow, the user had to choose the “Ask question” option in the main menu or after an assessment, and then we collected the question. We planned for four possible outcomes of the question-answering API call (the fourth was still not implemented in the model when our participation in the project ended):

- Failure: API call failed

- Success: API call succeeded and the model provided an answer

- Out of distribution (OOD): API call succeeded but the model provided no answer

- Need assessment: API call succeeded but the user should assess their symptoms to get their answer

If the outcome was a success, Chloe would ask an additional question to know if the answer was useful (the chat widget we used did not provide any kind of thumbs-up/thumbs-down UI to easily skip this interaction). If the outcome was OOD, the user was asked to reformulate.
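The four outcomes and the two in-form reactions described above can be sketched as follows. This is only an illustration under stated assumptions, not the project's code: the `QAOutcome` enum, the `next_step` function and the step names are hypothetical, and treating failure and need-assessment as "leave the form" is an assumption.

```python
from enum import Enum


class QAOutcome(Enum):
    FAILURE = "failure"                  # API call failed
    SUCCESS = "success"                  # the model provided an answer
    OUT_OF_DISTRIBUTION = "ood"          # the model provided no answer
    NEED_ASSESSMENT = "need_assessment"  # planned, but never available in the model


def next_step(outcome: QAOutcome) -> str:
    """Return the (hypothetical) name of the step that follows an outcome."""
    if outcome is QAOutcome.SUCCESS:
        # Ask whether the answer was useful.
        return "ask_feedback"
    if outcome is QAOutcome.OUT_OF_DISTRIBUTION:
        # Ask the user to reformulate their question.
        return "ask_reformulation"
    # Assumption: the other outcomes leave the form and the transition
    # to another flow is decided outside of it.
    return "exit_form"
```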

Collecting the question, the reformulation, and the feedback would be done in a form, but where and how to implement the transitions to the other flows was not so clear. There were six different transitions after the Q&A flow, depending on the outcome and on the presence of the “self_assess_done” slot we described earlier. We briefly considered asking the user what they wanted to do next inside the form, to keep the Q&A flow logic centralized, but we discarded the idea because we found no clean way to implement it. That is why we ended up relying on stories and the TED policy to predict the deterministic transitions.

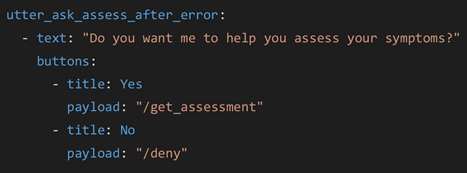

Another problem was that some of these transitions were “affirm/deny” questions, where “affirm” led either to an assessment or to asking another question. At this point, our basic assessment stories started directly with “get_assessment”, as a shortcut for memoization, and starting a story with “get_assessment OR affirm” would obviously lead to unwanted matches. We postponed the problem with a workaround that only worked because we controlled the user input through buttons. Like this:

An intent shortcut with buttons
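A minimal sketch of what such a shortcut can look like, relying on the Rasa convention that a button payload starting with `/` is interpreted as the intent itself. The helper name `yes_no_buttons` and the button titles are illustrative assumptions, not the project's actual code.

```python
from typing import Dict, List


def yes_no_buttons(affirm_intent: str, deny_intent: str = "deny") -> List[Dict[str, str]]:
    """Build yes/no buttons whose payloads name the intent directly.

    The "Yes" button can then send a flow-specific intent (for example
    "get_assessment") instead of a generic "affirm".
    """
    return [
        {"title": "Yes", "payload": f"/{affirm_intent}"},
        {"title": "No", "payload": f"/{deny_intent}"},
    ]


# "Yes" after a Q&A sends get_assessment, so the existing assessment
# stories starting with that intent still match.
buttons = yes_no_buttons("get_assessment")
```

The trade-off is the one noted above: this only holds as long as the user cannot type free text in place of clicking a button.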

This way, we did not have to add stories of assessments following a Q&A. In hindsight, we should have added them then, since adding stories with a Q&A following an assessment worked (mostly) well, and we had to do it anyway when adding NLU.

Flashback – Scene 5.25: Daily Check-In Enrollment Detours

The daily check-in enrollment flow design had been augmented and included unhappy paths. These had to be addressed since the phone number and validation code (added in this version) were collected directly from user text. These are the cases we addressed:

- Phone number is invalid

- User says they don’t have a phone number

- User wants to cancel because they don’t want to give their phone number

- Validation code is invalid

- User did not receive the validation code and wants a new one sent

- User did not receive the validation code and wants to change the phone number

Some of these fall somewhere between a digression and error handling, and we thought of implementing them as “controlled” digressions, as we had already done for the pre-conditions explanations.

But since most of them involved error counters, error messages, or more complexity, we decided to manage them all inside the form instead of separating the logic between forms, stories and intent mappings. It did have downsides (other than the hundreds of lines of additional code): some logic happened over multiple interactions and we had to add many slots for counters and flags to keep track of the progression (our final version of the form uses ten such slots).
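To give an idea of what managing errors inside the form implies, here is a minimal sketch of a validation step that tracks an error counter in a slot. Everything specific in it is an assumption made for illustration: the slot names, the limit of two errors, and the 10-digit North American phone format.

```python
import re
from typing import Any, Dict

MAX_PHONE_ERRORS = 2  # hypothetical limit before the enrollment is cancelled


def validate_phone_number(text: str, slots: Dict[str, Any]) -> Dict[str, Any]:
    """Return the slot updates for one user answer to the phone-number question."""
    digits = re.sub(r"\D", "", text)  # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop a leading country code
    if len(digits) == 10:
        # Valid number: fill the slot and reset the counter.
        return {"phone_number": digits, "phone_number_error_counter": 0}

    errors = slots.get("phone_number_error_counter", 0) + 1
    updates = {"phone_number": None, "phone_number_error_counter": errors}
    if errors > MAX_PHONE_ERRORS:
        # Too many invalid answers: raise a flag so the form can exit.
        updates["enrollment_cancelled"] = True
    # Leaving "phone_number" empty makes the form ask the question again.
    return updates
```

Each unhappy path needs its own counter or flag of this kind, which is how the number of bookkeeping slots grows.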

Flashback – Scene 5.75: Stopping for a Special Class for TED

After some tests, it was brought to our attention that the user could get stuck in a Q&A OOD loop since we only gave the option to reformulate. The design was changed so that the user could either retry or exit Q&A, and we added 2 more transitions for this case.

Adding these, we hit a thin wall: the TED policy did not learn the correct behaviour after Q&As, because it mixed up the effects of the “question_answering_status” and “symptoms” slots. Redistributing the Q&A examples equally between assessments with no, mild or moderate symptoms was tedious clerical work, but it worked: in the end, the policy predicted the correct behaviour on conversations that were not in our stories.

Scene 6: Implementing Testing Sites Navigation on Autopilot

After wrestling with Q&A transitions and daily check-in enrollment error handling, testing sites navigation brought no new challenge. The flow consisted of three major steps:

- Explain how it works and ask if the user wants to continue

- If so, collect their postal code and validate its format and existence, cancelling after too many errors

- Display the resulting testing sites or offer a second try if there were none.

Consistent with our previous implementations, we used a form to collect the postal code, handle errors, make the API calls and offer the second try, and stories to display the explanations and transition to other flows. The transitions, again, varied depending on the API call outcome and on the “self_assess_done” slot.

Scene 7: Exploring the Sinuous Path of NLU

Once we had finally worked through the feature list the fast way (buttons, no error handling), we could explore integrating NLU and handling unhappy paths. We started with the first input/main menu as a test. Anything that was not part of the options would be sent to the Q&A API, but because NLU in Rasa is non-contextual, and because we expected a large variety of questions for the Q&A, this “anything” could be any intent, with any score. “How will we handle all these intents?” was not as trivial as it might seem.

Option 1: Add examples in stories

The straightforward path was to add stories with unsupported intents and the error behavior (directing to the Q&A form), but how many examples would it take? The TED policy could not be expected to learn to use these error examples as catch-alls, and using ORs to include all unsupported intents would have multiplied the training time exponentially as soon as we applied this approach to other cases. This path was a dead-end.

Option 2: Core fallback

If we did not include the unsupported intents in stories, the TED policy would still predict something, but we could hope for the confidence score to be low, and set a threshold to trigger a fallback action. The action would replace the intent with “fallback”, and we could manage this one intent in the stories. But the predictions for our expected behaviors did not all have high confidence scores; some were not far from what a misplaced “affirm” could get, since that intent appeared in many stories. We therefore did not want to depend on a threshold to trigger the fallback.

The solution: Unsupported intent policy

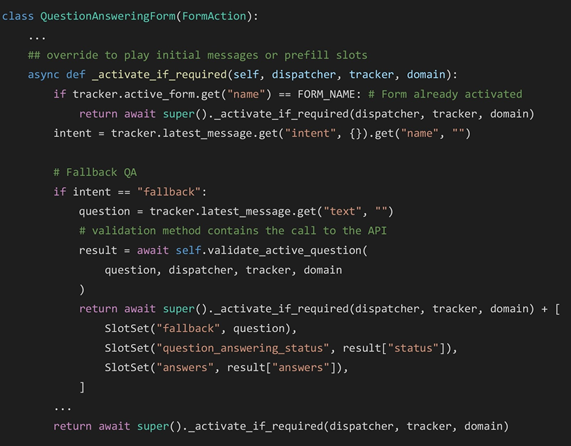

We ended up using the “fallback” intent idea, but with a deterministic policy. The policy predicted the action that replaces the intent if the latest relevant action before the user input was the main-menu question and the intent was not in the list of supported ones. Stories and memoization were used to trigger the Q&A form and manage the peculiar transitions after it (call failure and OOD were followed by a main menu error message instead of the regular messages). To achieve this, the Q&A form was modified to pre-fill the question slot with the last user input if the intent was “fallback”:

Using the trigger message in the question answering form
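A minimal sketch of the pre-filling logic, written as a plain function so it runs without Rasa. The `latest_message` argument imitates the shape of `tracker.latest_message` in the Rasa SDK; the function name and the constant are hypothetical.

```python
from typing import Any, Dict, Optional

FALLBACK_INTENT = "fallback"


def prefill_question(latest_message: Dict[str, Any]) -> Optional[str]:
    """Return the text to store in the question slot when the form starts.

    `latest_message` is expected to look like
    {"text": "...", "intent": {"name": "..."}}.
    """
    intent = latest_message.get("intent", {}).get("name")
    if intent == FALLBACK_INTENT:
        # The user already typed their question at the main menu: reuse it.
        return latest_message.get("text")
    # Otherwise the slot stays empty and the form asks for the question.
    return None
```

With the slot pre-filled, the form skips its own "what is your question?" prompt and goes straight to the API call.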

Scene 8: Further Explorations

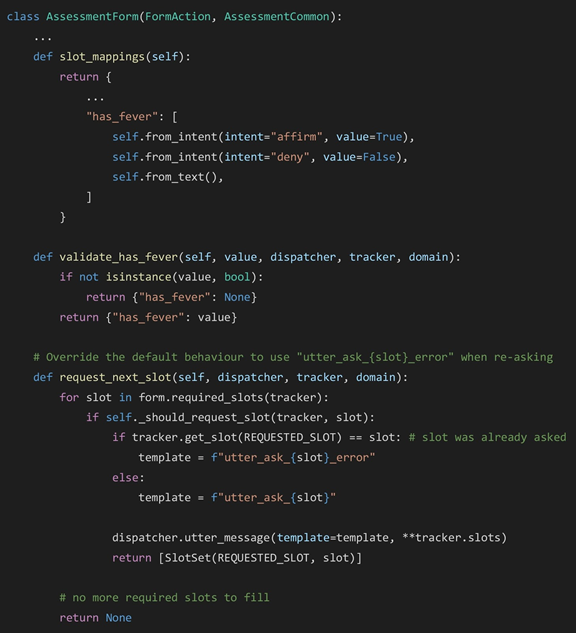

As a second step, we added NLU in yes-no questions, which, per design, simply triggered a reformulated question with buttons and no text field. The majority of those were in forms, some with exceptions to the “utter_ask_{slot_name}” message convention. The exceptions also applied to the error messages, thus a generic approach that wouldn’t even apply to all cases seemed too complicated for the benefits of it, and we did not spend time thinking about one. It seemed simpler and faster to just manage everything in the forms like this:
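A minimal sketch of this kind of in-form handling, assuming a validation helper that receives the slot name and the recognized intent. The helper name and the `_error` message suffix are hypothetical; as noted above, the real message names did not always follow a single convention.

```python
from typing import Dict, Optional, Tuple


def validate_yes_no(
    slot_name: str, intent: str
) -> Tuple[Dict[str, Optional[bool]], Optional[str]]:
    """Return (slot updates, name of the message to send, if any)."""
    if intent == "affirm":
        return {slot_name: True}, None
    if intent == "deny":
        return {slot_name: False}, None
    # Any other intent: keep the slot empty so the form asks again, this
    # time with the reformulated, buttons-only version of the question.
    return {slot_name: None}, f"utter_ask_{slot_name}_error"
```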

Intermission: Losing the Feedback Phantom Trailing Behind Us

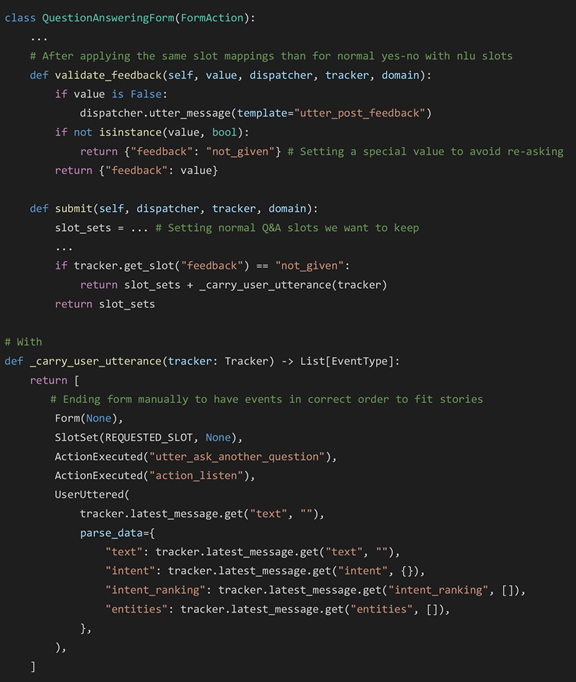

Adding NLU, and consequently flexibility, we were reminded of the mandatory and cumbersome feedback interaction that still haunted us, and decided to make it more flexible, too. We still didn’t have a feedback widget or time to implement one, so we kept the question, but adapted the reaction: if the user answered something other than “affirm/deny”, it would be treated as if they were already in the next question, which offered to ask another question and could lead to the other functionalities. This required a bit of gymnastics to preemptively exit the form and “reproduce” the user input:
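The gymnastics can be sketched roughly as follows. The events are plain dictionaries that only imitate the shape of serialized Rasa events, and the slot name `feedback` and the action name `utter_ask_another_question` are assumptions made for the example.

```python
from typing import Any, Dict, List


def handle_feedback_answer(latest_message: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the events to emit after the "was this answer useful?" question."""
    intent = latest_message["intent"]["name"]
    if intent in ("affirm", "deny"):
        # Regular case: store the feedback and let the form continue.
        return [{"event": "slot", "name": "feedback", "value": intent == "affirm"}]

    return [
        # Leave the form early, as if the feedback question had been skipped.
        {"event": "form", "name": None},
        # Pretend the next question was already asked, then wait for input...
        {"event": "action", "name": "utter_ask_another_question"},
        {"event": "action", "name": "action_listen"},
        # ...and replay the user's message as the answer to that question.
        {"event": "user", "text": latest_message["text"], "parse_data": latest_message},
    ]
```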

Scene 9: Final Sprint to Add NLU

Since we already had the policy to replace intents with “fallback”, error handling outside forms was mostly a matter of adding entries to the dictionary that maps each latest action to its supported intents, and adding stories to react to the “fallback” intent, either by entering the Q&A form or by displaying an error message, as the design required. Inside forms, we applied the same approach as for yes-no questions. We were forced to make some collateral changes, like adding a province entity, or adding stories (mostly ORs, though) to manage the transitions where “affirm” or “deny” were valid (now that the buttons shortcut was unavailable). We also had to backtrack on our cleanly handled pre-conditions digression, since the simple mapping policy solution could not be combined with error handling, and we managed it inside the form like everything else.
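The core decision of such a policy can be sketched with a plain dictionary lookup. The action and intent names below are invented for illustration, and the real policy also has to plug into Rasa's policy interface, which is left out here.

```python
from typing import Dict, Optional, Set

# Hypothetical entries: latest bot action -> intents that stories handle after it.
SUPPORTED_INTENTS: Dict[str, Set[str]] = {
    "utter_ask_how_may_i_help": {"get_assessment", "ask_question", "checkin_enroll"},
    "utter_ask_another_question": {"affirm", "deny", "ask_question"},
}


def should_replace_intent(latest_action: Optional[str], intent: str) -> bool:
    """Tell whether the intent should be replaced with "fallback"."""
    supported = SUPPORTED_INTENTS.get(latest_action)
    if supported is None:
        # Question not covered by the dictionary: leave it to other policies.
        return False
    return intent not in supported
```

Because the decision is a lookup and not a score comparison, covering a new question only means adding one entry to the dictionary.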

The end

Looking back, even though we added NLU, it seems like we took a lot of shortcuts and a lot of not-so-Rasa-esque approaches. Our use case, completely predictable, with no random navigation, full of exceptions and tiny variations, did not correspond to a typical Rasa use case. We wrestled with lots of obstacles that come naturally when trying to implement a boxes-and-arrows design with Rasa. But Rasa offers flexibility through code and possible additions, and in the end, we often chose code to represent dialogue patterns because when time is short, the road we know is the safest way to end up where we want.

In a further installment, we will dive deeper into two features of a boxes-and-arrows design that are hard to implement with Rasa, decision trees and dialogue modularity, and into the different ways to implement them. We will also explore if and how Rasa 2.0, still in the alpha stage at the time of writing, can make this task easier.